Ethics in AI development is not a peripheral concern—it is central to the integrity, impact, and sustainability of the technology itself. As artificial intelligence becomes more embedded in decision-making processes across industries, the choices made during its design and deployment carry profound consequences. These systems influence hiring practices, loan approvals, medical diagnoses, law enforcement, and countless other areas that touch human lives. Without a strong ethical foundation, AI risks amplifying biases, eroding trust, and creating unintended harm. The role of ethics is to ensure that innovation does not outpace responsibility.

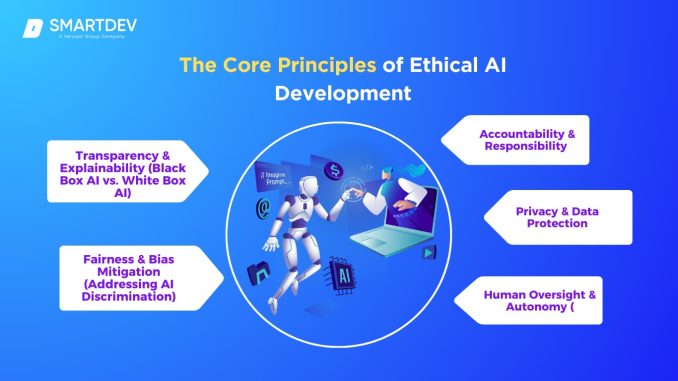

One of the most pressing ethical challenges in AI is bias. Machine learning models are trained on data, and that data often reflects historical inequalities or systemic prejudices. If not carefully managed, these biases can be encoded into algorithms and perpetuated at scale. For example, an AI system used in recruitment might favor candidates from certain backgrounds if the training data reflects past hiring preferences. Similarly, facial recognition technologies have shown disparities in accuracy across different demographic groups, raising concerns about fairness and accountability. Ethical AI development requires rigorous scrutiny of data sources, thoughtful model design, and continuous evaluation to mitigate these risks.
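"Continuous evaluation" can start with something as simple as comparing a model's accuracy across demographic groups, which is the kind of disparity reported for facial recognition systems. The Python sketch below assumes you already have true labels, predictions, and a group label for each example. The toy data, the group names "A" and "B", and the 0.05 tolerance are hypothetical placeholders. Per-group accuracy is also only one of several fairness measures; real audits usually compare specific error types, such as false positive rates, as well.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        if truth == pred:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}

def max_accuracy_gap(per_group):
    """Largest accuracy difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical toy labels, predictions, and group membership.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

# Hypothetical tolerance; what counts as an acceptable gap is a policy decision.
TOLERANCE = 0.05

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
flagged = gap > TOLERANCE

print(per_group)  # {'A': 0.75, 'B': 0.5}
print(f"gap={gap:.2f}, flagged={flagged}")  # gap=0.25, flagged=True
```

In this toy case the model is right 75% of the time for group A but only 50% for group B. The resulting gap of 0.25 exceeds the illustrative tolerance, so the model would be flagged for closer review rather than deployed as-is.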

Transparency is another cornerstone of ethical AI. Many systems operate as black boxes, producing outputs without clear explanations of how decisions are made. This lack of interpretability can be problematic, especially in high-stakes contexts like healthcare or criminal justice. Stakeholders need to understand the rationale behind AI-driven decisions to assess their validity and challenge them when necessary. Ethical development encourages the use of explainable AI techniques, documentation of design choices, and open communication with users. It’s not just about technical clarity—it’s about fostering trust and enabling informed oversight.

Privacy concerns also loom large in AI development. These systems often rely on vast amounts of personal data to function effectively. Whether it’s browsing history, location data, or biometric information, the collection and use of sensitive data must be handled with care. Ethical AI respects user consent, minimizes data collection, and prioritizes security. It also considers the long-term implications of data use, including potential misuse or unintended exposure. Developers must navigate the tension between personalization and privacy, ensuring that convenience does not come at the cost of autonomy.
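As a small illustration of respecting consent and minimizing collection, the Python sketch below stores nothing unless the user has consented. Even when the user has consented, it keeps only an explicit allowlist of fields. The field names, the allowlist, and the sample record are hypothetical. Deciding which fields a system genuinely needs is a design judgment that code alone cannot make.

```python
from typing import Any, Dict, Optional

# Hypothetical allowlist: the only fields an imagined personalization
# feature needs. Anything not listed here is never stored.
ALLOWED_FIELDS = frozenset({"user_id", "preferred_language"})

def minimize_record(record: Dict[str, Any], consented: bool) -> Optional[Dict[str, Any]]:
    """Return a reduced copy of `record`, or None if the user has not consented."""
    if not consented:
        # No consent: store nothing at all.
        return None
    # Keep only allowlisted fields; everything else is dropped.
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}

# Hypothetical raw event containing more data than the feature needs.
raw = {
    "user_id": "u123",
    "preferred_language": "en",
    "gps_location": (52.52, 13.40),
    "browsing_history": ["example.com/news", "example.com/shop"],
}

print(minimize_record(raw, consented=True))   # {'user_id': 'u123', 'preferred_language': 'en'}
print(minimize_record(raw, consented=False))  # None
```

The sketch uses an allowlist rather than a blocklist so that any new field added upstream, such as a new sensor reading, is excluded by default until someone deliberately decides it is needed.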

Accountability is essential in the ethical landscape of AI. When systems fail or cause harm, it must be clear who is responsible. This is particularly challenging in complex ecosystems where multiple actors contribute to the development and deployment of AI. Ethical frameworks help define roles, establish protocols, and create mechanisms for redress. They encourage organizations to take ownership of their technologies and to respond proactively when issues arise. Accountability is not just about assigning blame—it’s about creating a culture of responsibility that supports continuous improvement.

Inclusivity also plays a vital role in ethical AI development. The people who build these systems shape their behavior, and diverse teams are more likely to anticipate a wider range of needs and risks. Including voices from different backgrounds, disciplines, and communities enriches the development process and leads to more equitable outcomes. Ethical AI is not developed in isolation—it is informed by dialogue, collaboration, and a commitment to serving the broader public good. This means engaging with stakeholders, listening to concerns, and adapting designs to reflect real-world complexity.

The pace of AI innovation adds urgency to ethical considerations. As capabilities expand, so do the potential consequences. Autonomous systems, generative models, and predictive analytics are evolving rapidly, often outstripping regulatory frameworks and public understanding. Ethical development acts as a stabilizing force, guiding progress with foresight and care. It encourages developers to ask not just what is possible, but what is appropriate. It challenges organizations to consider long-term impacts, societal implications, and the values they want their technologies to embody.

Education and awareness are critical to embedding ethics in AI. Developers, business leaders, and policymakers need to understand the ethical dimensions of their work. This includes training in ethical reasoning, exposure to case studies, and engagement with interdisciplinary perspectives. Ethical literacy empowers teams to make informed decisions and to recognize the broader context of their innovations. It also supports a shared language for discussing challenges and solutions, fostering a more cohesive and responsible tech community.

Ultimately, the role of ethics in AI development is to align technology with human values. It’s about ensuring that progress serves people, respects rights, and promotes justice. Ethics provides the lens through which we evaluate impact, the compass that guides decision-making, and the foundation that supports trust. As AI continues to shape our world, its development must be grounded in principles that reflect our highest aspirations. That’s not just good practice—it’s essential for building systems that are not only intelligent, but wise.